I wanted to test out some of my ideas about ‘The Nature of the Screen’ as outlined in the Development Notes – from Paper on iPad Pro post. How do the group respond to and interact with the screens when they’re used in different ways?

Technical

For this demo I researched, tested and implemented different system configurations and coding approaches for spanning a fullscreen window across all monitors on the project’s new Mac Pro.

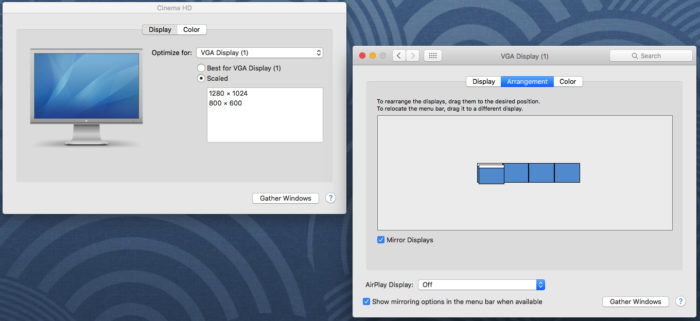

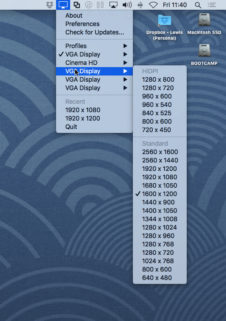

Three steps resolved most of the configuration issues: adjusting screen arrangements and mirroring in OS X System Preferences > Displays > Arrangement; installing the resolution switcher utility QuickRes to easily select resolutions for the multiple monitors and set up a Profile; and disabling ‘Displays have separate Spaces’ in the Mission Control preferences, as advised via https://forum.openframeworks.cc/t/multi-display-fullscreen-0-9-0-osx/22010. That thread also provided alternate and optimised oF code, although I also tested various screen control addons such as ofxScreenSetup and alternate window configuration code found on blogs and forums elsewhere.

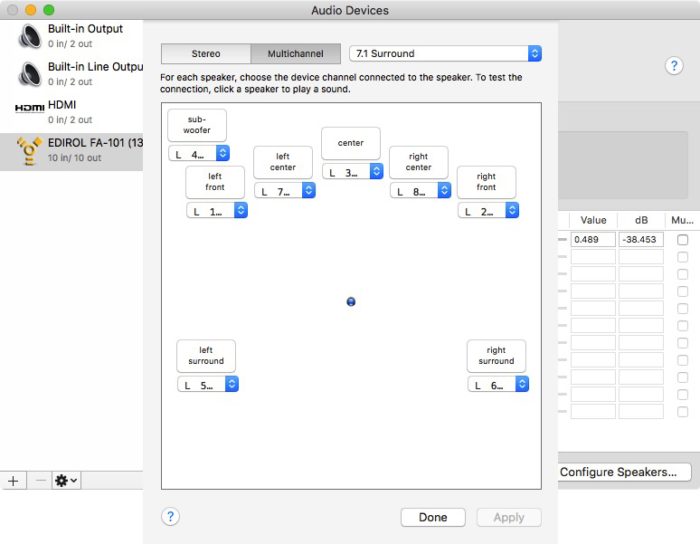

I researched and tested existing oF addons for multi-channel/speaker outputs such as ofxMultiDeviceSoundPlayer, ofxMultiSpeakerSoundPlayer and ofxSoundPlayerMultiOutput but settled on ofxFmodSoundPlayer2 – “a FMOD player for openFrameworks that can play up to 8 audio channels and select the desired soundcard”. Using it I realised pseudo-spatialised audio of sound files loaded and triggered within oF through LEVEL’s quad system, i.e. associating a speaker pair with each screen and routing audio, via an Edirol FA-101 sound card, from the Mac Pro into the 2 x stereo amps of the speaker system. ofxFmodSoundPlayer2 proved to be a bit quirky, but after a fair amount of trial and error and more research I realised that it worked in a quite particular way, and this required a custom configuration of the Edirol FA-101 sound card via the OS X Audio Devices configuration.
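As a rough illustration of “associating a speaker pair with each screen”, here is a minimal sketch in plain C++ (no oF or FMOD calls). The only pairing taken from this post is screen 3 (rear) with speakers 3 & 4; the rest of the layout, the function name `gainsForScreen` and the `Gains` type are assumptions made up for the example, not how ofxFmodSoundPlayer2 is actually configured.

```cpp
#include <array>
#include <cassert>

// Per-speaker gains for a quad system, indexed speaker 1..4 -> [0..3].
using Gains = std::array<float, 4>;

// Hypothetical layout (an assumption, apart from screen 3):
//   speakers: 1 = front-left, 2 = front-right, 3 = rear-left, 4 = rear-right
//   screens:  1 = front, 2 = right, 3 = rear, 4 = left
Gains gainsForScreen(int screen) {
    assert(screen >= 1 && screen <= 4);
    static const std::array<Gains, 4> table = {{
        {{1.f, 1.f, 0.f, 0.f}},  // screen 1 (front): speakers 1 & 2
        {{0.f, 1.f, 0.f, 1.f}},  // screen 2 (right): speakers 2 & 4
        {{0.f, 0.f, 1.f, 1.f}},  // screen 3 (rear):  speakers 3 & 4
        {{1.f, 0.f, 1.f, 0.f}},  // screen 4 (left):  speakers 1 & 3
    }};
    return table[screen - 1];
}
```

A sound triggered for a given screen would then be played with these gains applied to the four output channels, however the chosen sound player exposes them.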

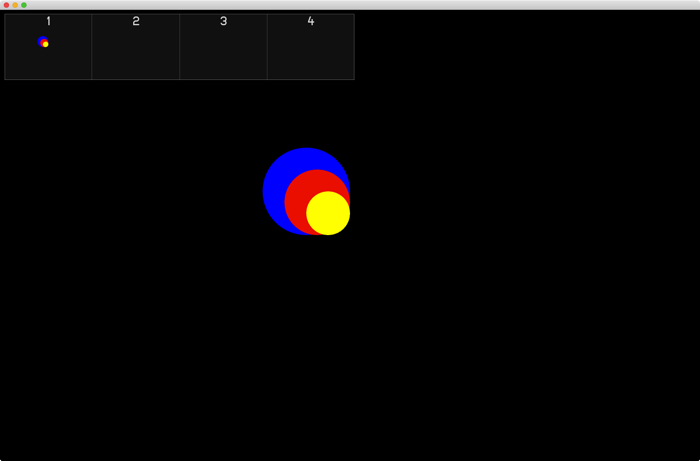

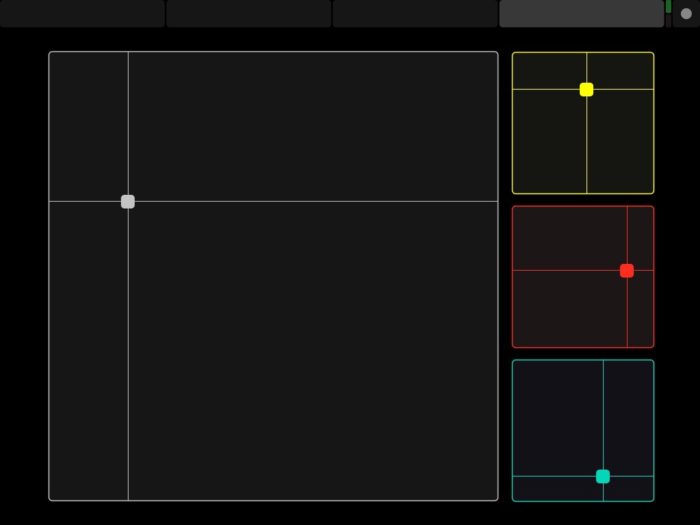

I also implemented a ‘viewport’ in the oF sketch to display the multiscreen output as an inset at the top of the ‘main’ screen – the mirrored ‘front’ projector/Cinema HD output – so that I could see a representation of the multiscreen output on a single or dual screen laptop at home. This idea came from research into existing multiscreen, spatialised audio setups elsewhere – and specifically from a video of the Simulator software for the ICST Immersive Lab – “an artistic and technological research project of the Institute of Computer Music and Sound Technology at the Zurich University of the Arts. It is a media space that integrates panoramic video, surround audio with full touch interaction on the entire screen surface”.
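The geometry of such an inset is simple enough to sketch. The following is a minimal example in plain C++, assuming the four screens are laid out side by side as one wide canvas and the inset spans the full width of the main screen; the `Rect` struct, the function name `insetViewport` and the resolutions in the usage note are all hypothetical, not taken from the actual sketch.

```cpp
#include <cassert>

// Simple rectangle in pixels (hypothetical helper type for this example).
struct Rect {
    float x, y, w, h;
};

// Scale the whole multiscreen canvas down so that it fits the width of the
// main screen, and place it at the top-left corner of that screen.
Rect insetViewport(float canvasW, float canvasH, float mainW) {
    assert(canvasW > 0.0f && canvasH > 0.0f && mainW > 0.0f);
    float scale = mainW / canvasW;  // uniform scale keeps the aspect ratio
    return {0.0f, 0.0f, mainW, canvasH * scale};
}
```

For example, four 1920 x 1080 outputs form a 7680 x 1080 canvas, which on a 1920 pixel wide main screen gives an inset of 1920 x 270. In oF one option would be to render the canvas into an ofFbo and draw it at this rectangle.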

Interaction

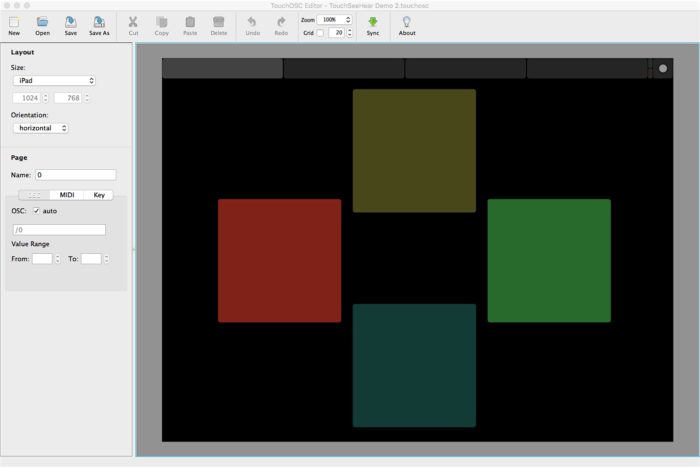

I refined the approach from Demo 1 of realising input from a TouchOSC interface on an iPad 2 into the oF sketch on the Mac via the ofxOsc addon and via the LEVEL Centre’s default Wi-Fi network – but developed and extended it significantly.

I wanted to see how the group responded to set ups where:

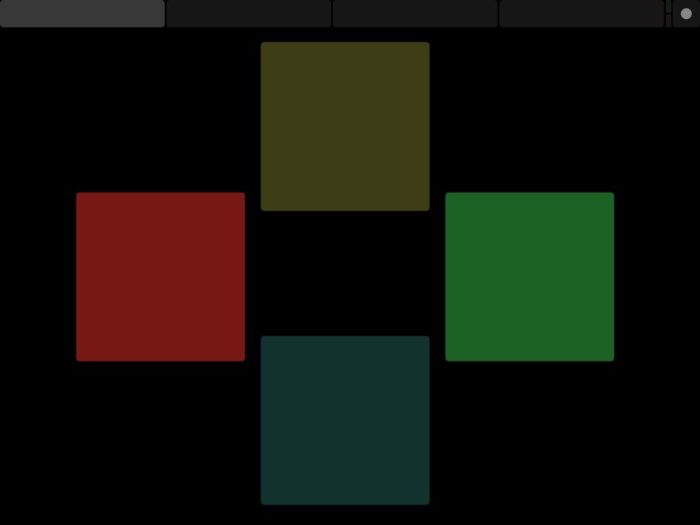

1. The screens are independent of each other – i.e. only one graphic per screen although the sound is located through the appropriate speaker pair

An iPad interface with 4 buttons – pressing a button triggers a graphic (a coloured circle) on the output screen and an associated sound.

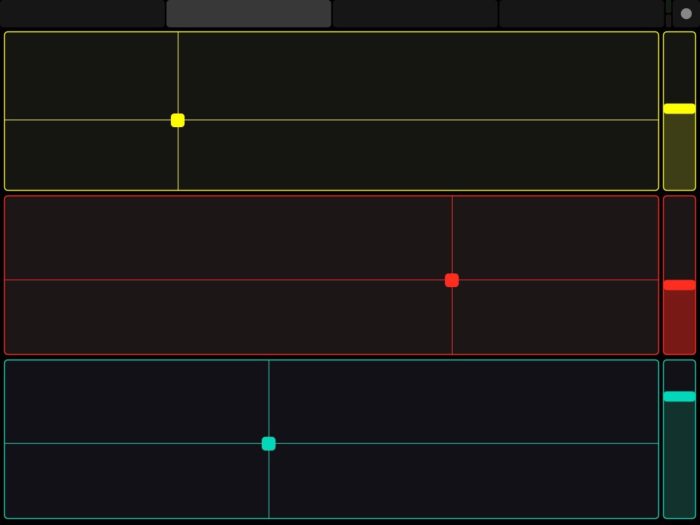

2. The screens are arranged like an extended desktop – you can move back and forth between the screens but can’t move from screen 4 to 1 or 1 to 4

An iPad interface with 3 x XY pads – X is position of the associated graphic (a coloured circle) on an extended desktop from screen 1-4 (and its associated sound to the relevant speaker pairs) and Y is its pitch/height on the screen + a fader for volume.

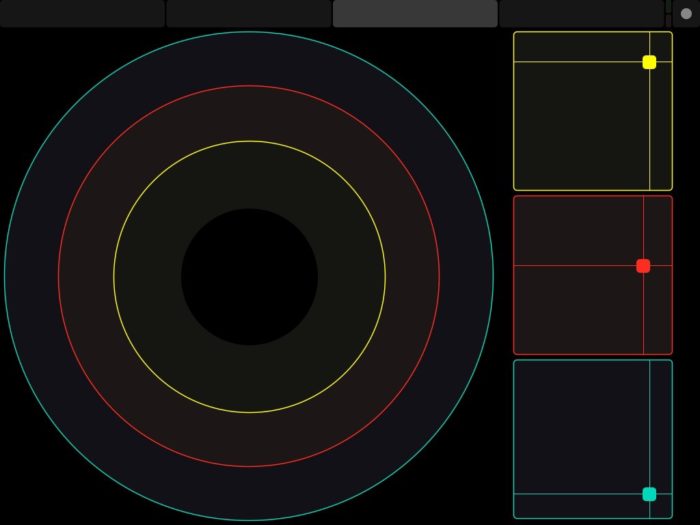

3. The 4 screens are essentially windows onto a virtual 360 degree panoramic but 2D world – you can move freely between them

An iPad interface with 3 circular encoders – spinning an interface encoder moves the output circle continuously around the 4 screens (and its associated sound to the relevant speaker pairs) + 3 x XY pads where Y is its pitch/height on the screen and X is volume/opacity.

4. The 4 screens are mirrors of the physical space – the output on the screens tries to reflect what it would look like if there were a physical mirror on each wall and three coloured balls were moved nearer and farther away from each mirror

An iPad interface with a multitouch XY pad for the position – Y for front/back and X for left/right – but requiring one finger for each of the 3 coloured circles + 3 x XY pads where Y is its pitch/height on the screen and X is volume/opacity.
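Setups 2 and 3 above differ mainly in what happens at the outer edges: the extended desktop stops at screens 1 and 4, while the panorama wraps around. Here is a minimal sketch of that difference in plain C++, assuming a horizontal control value where 0..1 covers all four screens once; the names `ScreenPos`, `extendedDesktopPos` and `panoramaPos` are invented for the example, and how the TouchOSC controls actually deliver their values is not covered.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct ScreenPos {
    int screen;    // 0-based screen index (0 = screen 1)
    float localX;  // horizontal position within that screen, 0..1
};

// Setup 2: extended desktop. Input is clamped, so a circle cannot
// move from screen 4 to screen 1 or from screen 1 to screen 4.
ScreenPos extendedDesktopPos(float x, int numScreens = 4) {
    assert(numScreens > 0);
    float clamped = std::min(1.0f, std::max(0.0f, x));
    float scaled = clamped * numScreens;
    int screen = std::min(numScreens - 1, static_cast<int>(scaled));
    return {screen, scaled - static_cast<float>(screen)};
}

// Setup 3: panorama. Input is measured in full turns and wraps, so
// moving past screen 4 continues onto screen 1 and vice versa.
ScreenPos panoramaPos(float turns, int numScreens = 4) {
    assert(numScreens > 0);
    float wrapped = turns - std::floor(turns);  // fractional part of a turn
    float scaled = wrapped * numScreens;
    int screen = static_cast<int>(scaled) % numScreens;
    return {screen, scaled - std::floor(scaled)};
}
```

The screen index could then pick the speaker pair for the sound, and `localX` the horizontal position of the circle within that screen.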

Aesthetic

I selected fairly abstract drones and sound textures for each sound, broadly split by frequency range and colour: bass – blue, mids – red, treble – yellow, plus green for a beat.

The simple graphics (a circle); the bold colours (yellow, blue, red, green); the strong visual-to-aural associations, e.g. loudness to transparency, where the quieter a sound the more transparent its graphic appears on the screen; and the positioning of the input elements on the TouchOSC interface, with their colours matched to the outputs on the screen, all attempted to create a direct and obvious link between input and output.
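The loudness-to-transparency link can be expressed as a one-line mapping. This is a minimal sketch in plain C++, assuming volume arrives as a 0..1 value and the graphic’s alpha is an 8-bit 0..255 value (the range oF colours use); the function name `alphaForVolume` and the linear curve are assumptions, not necessarily what the demo used.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Map a 0..1 volume to a 0..255 alpha: silent = fully transparent,
// full volume = fully opaque. Out-of-range input is clamped.
int alphaForVolume(float volume) {
    assert(!std::isnan(volume));
    float v = std::min(1.0f, std::max(0.0f, volume));
    return static_cast<int>(std::lround(v * 255.0f));
}
```

The result could be passed as the alpha argument when setting the circle’s colour, so that a fader or XY pad controls both the sound’s volume and the graphic’s opacity from the same value.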

Here’s a rough demo video of the TouchOSC interface and audiovisual outputs… note that it’s a single screen capture, so the multiscreen output can only really be appreciated in the viewport inset at the top of the screen… also the audio is only stereo, so the spatialised audio to speakers 3 & 4 (i.e. the rear screen – screen 3) can’t be heard…